CSIT Nov 24 Mini Challenge

This blog post covers the CSIT Nov 24 Cloud Development Mini-Challenge.

Introduction

Every so often, CSIT releases mini-challenges that touch on different topics. You can find the up-to-date challenge at their webpage here. From 18 Nov to 6 Dec 2024, it was a Cloud Development Mini-Challenge that focused on Kubernetes. The goal was to find "flags" and submit them to receive the completion badge shown above. The challenges can all be solved on Killercoda and don't require you to have your own Kubernetes setup. Thankfully, I have some experience with Kubernetes, so this challenge was a good revision for me. I completed it on 21 Nov 2024.

Walkthrough

Challenge 1

The task asked us to create a deployment and read the application's logs. Fairly simple with the hints that they provide! I won't provide the answer here, but the commands below will give you the correct response.
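A quick note on the `sed 's/.*: //'` filter that the log command in this task ends with: `.*` is greedy, so the expression deletes everything up to and including the last colon-plus-space on each line and leaves only the trailing value. Here is a minimal sketch on a made-up log line (the line format is my assumption, not the real challenge output):

```shell
# Hypothetical log line; the real challenge output is not reproduced here.
sample='time: 10:42 INFO flag: example-value'

# Greedy .* matches up to the LAST ": " on the line, so only the tail remains.
stripped=$(printf '%s\n' "$sample" | sed 's/.*: //')
printf '%s\n' "$stripped"
```

Note that the colon inside `10:42` is ignored because it is not followed by a space.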

kubectl create deployment investigation-unit --image=sachua/task-1:v0.0.1

kubectl logs -n default deployment/investigation-unit | sed 's/.*: //'

Challenge 2

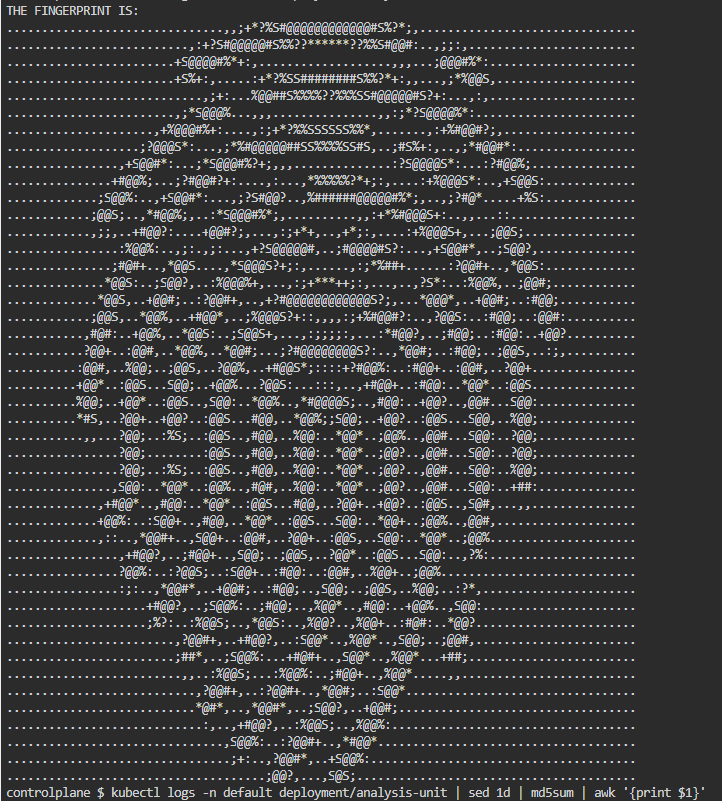

The next task required us to create a deployment that mounts a local path, /mnt/data. Personally, I found two ways to solve this: either create a persistent volume claim or use hostPath directly to mount that directory. You should get an output that resembles the image below. Next, you can either save the output into a file and calculate the MD5 hash using md5sum, or use the commands provided below.
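For the "save it to a file" route, here is a rough sketch of what I mean. The log text is a made-up stand-in for the real `kubectl logs` output, and the temp file is just for illustration. It shows that hashing a saved file and hashing the same bytes from a pipe give the same MD5, as long as nothing is added or trimmed in between:

```shell
# Stand-in for `kubectl logs ...` output; the text is made up.
tmpfile=$(mktemp)
printf 'header line\nline one\nline two\n' > "$tmpfile"

# Option A: hash the saved file (md5sum prints "<hash>  <filename>").
hash_file=$(md5sum "$tmpfile" | awk '{print $1}')

# Option B: hash the same bytes straight from a pipe.
hash_pipe=$(cat "$tmpfile" | md5sum | awk '{print $1}')

echo "$hash_file"
echo "$hash_pipe"
rm -f "$tmpfile"
```

Both lines printed should be identical. If they differ in practice, the usual cause is an editor adding or removing a trailing newline when the file is saved.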

Method 1 - using persistentVolumeClaims

apiVersion: v1
kind: PersistentVolume
metadata:
  name: mnt-data-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: /mnt/data

---

kubectl apply -f mnt-data-pv.yaml

---

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mnt-data-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

---

kubectl apply -f pvc-claim.yaml

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: analysis-unit
  labels:
    app: analysis-unit
spec:
  replicas: 1
  selector:
    matchLabels:
      app: analysis-unit
  template:
    metadata:
      labels:
        app: analysis-unit
    spec:
      containers:
        - name: analysis-unit
          image: sachua/task-2:v0.0.1
          volumeMounts:
            - mountPath: /mnt/data
              name: example-volume
              readOnly: true
      volumes:
        - name: example-volume
          persistentVolumeClaim:
            claimName: mnt-data-pvc

---

kubectl apply -f analysis-unit.yaml

kubectl logs -n default deployment/analysis-unit | sed 1d | md5sum | awk '{print $1}'

---

Method 2 - using hostPath

apiVersion: apps/v1
kind: Deployment
metadata:
  name: analysis-unit
  labels:
    app: analysis-unit
spec:
  replicas: 1
  selector:
    matchLabels:
      app: analysis-unit
  template:
    metadata:
      labels:
        app: analysis-unit
    spec:
      containers:
        - name: analysis-unit
          image: sachua/task-2:v0.0.1
          volumeMounts:
            - mountPath: /mnt/data
              name: example-volume
              readOnly: true
      volumes:
        - name: example-volume
          hostPath:
            path: /mnt/data
            type: Directory

---

kubectl apply -f analysis-unit.yaml

kubectl logs -n default deployment/analysis-unit | sed 1d | md5sum | awk '{print $1}'

---
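Both methods end with the same pipeline, so here is a small breakdown of what each stage does. The input is a made-up stand-in for the deployment's logs (I am assuming, as the `sed 1d` suggests, that the first line is not part of the data to hash):

```shell
# Made-up stand-in for the deployment's log output.
logs=$(printf 'banner line to skip\nalpha\nbeta\n')

# sed 1d deletes line 1; md5sum hashes the rest; awk keeps only the hash column.
digest=$(printf '%s\n' "$logs" | sed 1d | md5sum | awk '{print $1}')

# Same digest computed directly from the remaining lines, for comparison.
expected=$(printf 'alpha\nbeta\n' | md5sum | awk '{print $1}')

echo "$digest"
```

Without the `awk` step, md5sum also prints a trailing `-` as the file name for piped input, which is not part of the hash.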

Challenge 3

Lastly, the task was to expose port 80 on the two previously created deployments and create another deployment, command-center. Reading the logs from command-center returns the answer. Exposing pods is relatively simple and can be done either in the YAML config or by using kubectl to expose a NodePort.
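For the YAML route, a Service manifest along these lines should be roughly equivalent to exposing one deployment with kubectl. This is a sketch rather than what I actually ran: the file name is arbitrary, and the selector assumes the `app: investigation-unit` label that `kubectl create deployment` adds by default.

```shell
# Write a NodePort Service manifest for one deployment to a file.
# File name is arbitrary; selector assumes the default app=<name> label.
manifest=investigation-unit-svc.yaml
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: investigation-unit
spec:
  type: NodePort
  selector:
    app: investigation-unit
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
EOF
```

It can then be applied with `kubectl apply -f investigation-unit-svc.yaml`. A second copy with the name and selector changed to `analysis-unit` would cover the other deployment. The kubectl route looks like this: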

kubectl get services

kubectl expose deployment/investigation-unit --type="NodePort" --port 80

kubectl expose deployment/analysis-unit --type="NodePort" --port 80

kubectl logs -n default deployment/command-center | grep -im 1 culprit | sed 's/.*: //'

Summary

It's a wrap! That's the end of the challenge. I would say this one was fairly easy compared to the previous few challenges that I have completed. I have the solutions for some previous challenges, but I did not record what was required... They would be tough to understand without some context, so I probably won't be posting walkthroughs for any legacy challenges.